The Missing Primitives for Trustworthy AI Agents

This is another installment in our ongoing series on building trustworthy AI Agents:

- Part 0 - Introduction

- Part 1 - End-to-End Encryption

- Part 2 - Prompt Injection Protection

- Part 3 - Agent Identity and Attestation

- Part 4 - Policy-as-Code Enforcement

- Part 5 - Verifiable Audit Logs

- Part 6 - Kill Switches and Circuit Breakers

- Part 7 - Adversarial Robustness

- Part 8 - Deterministic Replay

- Part 9 - Formal Verification of Constraints

- Part 10 - Secure Multi-Agent Protocols

- Part 11 - Agent Lifecycle Management

- Part 12 - Resource Governance

- Part 13 - Distributed Agent Orchestration

- Part 14 - Secure Memory Governance

- Part 15 - Agent-Native Observability

- Part 16 - Human-in-the-Loop Governance

- Part 17 - Conclusion (Operational Risk Modeling)

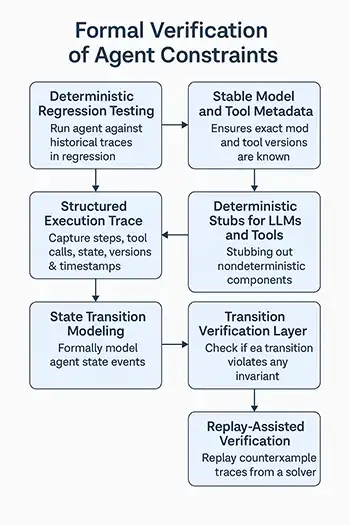

Formal Verification of Constraints (Part 9)

Modern agent systems operate in environments where incorrect behavior is costly or dangerous. They read sensitive data, manipulate financial exposure, trigger downstream tools, and often operate with partial autonomy. As these systems grow more capable, engineering teams increasingly need hard guarantees, not heuristic “best effort” protections.

Examples of such invariants include:

- “never transmit unencrypted PII to any outbound channel”

- “never exceed configured credit exposure thresholds”

- “never escalate privileges without cryptographic attestation”

- “every tool invocation must be logged before execution”

These guarantees cannot be reliably enforced by log monitoring or statistical testing alone. They require formal, machine-checked reasoning over agent state and transitions.

This section introduces the primitives needed to bring formal verification into agent pipelines.

Primitive 1: State Transition Modeling

Before verification is possible, we must answer a deceptively difficult question: what is the agent’s state, and how does it change?

Superficially this sounds simple, but real agent state is neither linear nor monolithic. Agents often accumulate context from LLM reasoning, tool outputs, user instructions, system prompts, policy flags, adversarial detectors, and more. This creates two problems:

1. State is non-monotonic

Agents can undo, override, or reinterpret previous decisions. Exposure can increase or decrease, encryption status can toggle, and intermediate results can be replaced.

2. State is highly compositional

The full state is not contained in one place. It is the combination of LLM working memory, tool state, risk scores, safety filter signals, and policy metadata.

Because of this complexity, unstructured logs are insufficient. They record events, not state. Formal verification requires a machine-readable model where every component of the state and every transition is represented explicitly.

Before any formal verification can take place, the hardest step is defining the state and transition space. Agent state is typically non-monotonic (actions can be undone or ignored) and highly compositional (the total state includes LLM working memory, external tool responses, and policy flags). This complexity is why we must move beyond unstructured logs to a formal, machine-readable model where every component is explicitly tracked.

Example: Minimal state machine in Python

from dataclasses import dataclass

@dataclass

class AgentState:

# Current financial liability exposure

exposure: float

# Maximum allowed exposure defined by regulation or risk policy

exposure_limit: float

# Indicates whether PII in memory is encrypted

pii_encrypted: bool

# Count of outbound transmissions, used for governance and audit checks

outbound_transmissions: int

This is deliberately minimal, but it provides the “shape” of a formal state in which constraints can be expressed.

Primitive 2: Declarative Invariants

Invariants are conditions that must always hold true, regardless of input, prompt variation, or model drift. They express the safety boundaries that no execution path is allowed to cross.

Examples include:

- Exposure must remain below or equal to the configured limit

- PII must be encrypted before data leaves the system

- Outbound messages must always be logged

- Tool commands must be authorized before execution

- These invariants act as the “laws of physics” for the agent.

Example: Python invariant functions

def invariant_no_exposure_overflow(state: AgentState) -> bool:

return state.exposure <= state.exposure_limit

def invariant_pii_must_be_encrypted(state: AgentState) -> bool:

return state.pii_encrypted

def invariant_transmissions_logged(state: AgentState, previous_logs: int) -> bool:

return state.outbound_transmissions >= previous_logs

Primitive 3: Transition Verification Layer

Formal verification is not just about checking that the final state is valid. It is about guaranteeing that every transition between states maintains the invariants.

This introduces the concept of transactional integrity:

- A state transition (State A → State B) must be treated as an atomic operation.

- If any invariant fails on the resulting state, the entire transition must be rejected.

- State A remains unchanged and the system is safe.

This is directly analogous to transactions in databases where constraints (foreign keys, uniqueness, not-null) prevent invalid states from being committed.

In an agent system:

- The LLM proposes a plan

- The system evaluates its impact as a hypothetical transition

- The next state is accepted only if all invariants hold

This pattern ensures that even if the model misbehaves, the system does not.

Example: exposure update transition

def update_exposure(state: AgentState, delta: float) -> AgentState:

new_state = AgentState(

exposure=state.exposure + delta,

exposure_limit=state.exposure_limit,

pii_encrypted=state.pii_encrypted,

outbound_transmissions=state.outbound_transmissions,

)

return new_state

Transition wrapped with verification

def verified_transition(state: AgentState, delta: float):

"""

Atomic transition. State is only updated if invariants hold.

"""

new_state = update_exposure(state, delta)

if not invariant_no_exposure_overflow(new_state):

raise RuntimeError("Exposure exceeds configured threshold")

return new_state

Primitive 4: Symbolic Execution and Model Checking

Agents can enter millions of possible states. It is impossible to manually enumerate every transition.

Symbolic execution uses SMT solvers such as Z3 to:

- represent state as symbolic variables

- represent transitions as equations

- represent invariants as logical constraints

- search for counterexamples where invariants fail

This is the foundation of industrial formal verification for cryptographic protocols, compilers, safety-critical systems, and distributed consensus.

Below is a more realistic example involving mixed numeric (Real), integer (Int), and Boolean (Bool) values. This demonstrates how SMT solvers model heterogeneous state - matching real agent systems.

Example: symbolic reasoning with Z3 (extended, multi-type)

from z3 import Real, Bool, Int, Solver, Not, sat

# Symbolic variables representing the AgentState BEFORE the transition

exposure = Real("exposure")

limit = Real("limit")

pii_encrypted = Bool("pii_encrypted")

outbound_transmissions = Int("outbound_transmissions")

# Variables representing symbolic transitions

delta = Real("delta")

log_increment = Int("log_increment")

# State transition equations

new_exposure = exposure + delta

new_transmissions = outbound_transmissions + log_increment

# Invariants that must always hold AFTER the transition

# 1. Exposure must not exceed limit

invariant_A = new_exposure <= limit

# 2. PII must remain encrypted

invariant_B = pii_encrypted == True

# 3. Transmissions must be logged, meaning:

# If new transmissions occur, log_increment must be > 0

invariant_C = (new_transmissions > outbound_transmissions) == (log_increment > 0)

solver = Solver()

# Preconditions (the initial state must be valid)

solver.add(exposure >= 0, limit > 0, exposure <= limit)

# Search for violations of invariant_A

solver.add(Not(invariant_A))

if solver.check() == sat:

print("Counterexample found (Invariant A violated):")

print(solver.model())

else:

print("Invariant holds under the defined transition model.")

This pattern scales to full agent state machines.

Primitive 5: Integration With the Agent Runtime

Formal invariants are most powerful when enforced at runtime. This creates a pipeline where:

- The LLM proposes an action or plan

- The system simulates the effect as a state transition

- The invariants are validated

- The transition is committed only if valid

This matches the pattern introduced in Part 4 (Policy-as-Code) but adds mathematical rigor.

Example: runtime verified plan

def apply_plan(state: AgentState, plan: dict):

"""

Example plan containing fields:

- 'exposure_delta'

- 'requires_encryption'

"""

# 1. Encryption invariant

if plan.get("requires_encryption") and not state.pii_encrypted:

raise RuntimeError("Plan violated PII encryption invariant")

# 2. Exposure invariant enforced via atomic transition

return verified_transition(state, plan.get("exposure_delta", 0))

Primitive 6: Replay-Assisted Verification

Part 8 introduced deterministic replay as the only reliable way to reproduce complex agent behavior. Here, replay closes the loop on formal verification by making counterexamples actionable.

When Z3 or another solver finds a counterexample, it produces a model: a fully concrete set of variable assignments (for example, exposure, delta, pii_encrypted, outbound_transmissions). These assignments represent the exact conditions under which an invariant fails.

Replay-assisted verification ties this together:

- Extract counterexample model from the solver - Z3 outputs the offending values.

- Use the model to construct a synthetic TraceEvent sequence - Matching the Part 8 trace schema.

- Feed that trace into the deterministic replay engine - Engineers can then step through the violating run interactively.

- Understand precisely how the system failed - Review intermediate LLM decisions, tool outputs, and transitions.

Replay converts mathematical counterexamples into hands-on forensic tools.

Why This Matters

Formal verification elevates agent safety from “best effort” to mathematically grounded guarantees.

It enables:

- provable compliance for PII handling, financial risk limits, and safety constraints

- reproducible counterexample analysis via deterministic replay

- deep integration with identity (Part 3), policy enforcement (Part 4), and audit logs (Part 5)

- protection against model drift and adversarial manipulation

- behaviorally stable agent deployments

This primitive completes the foundation for trustworthy, autonomous agent systems.

Practical Next Steps

- Identify high-impact invariants to verify (PII rules, financial constraints).

- Build a formal state model and enumerated transitions.

- Use Z3 or TLA+ to search for counterexamples.

- Feed counterexamples into replay for forensic debugging.

- Integrate invariants into runtime transitions.

- Treat formal verification results as mandatory pre-deployment checks.

Part 10 will explore Secure Multi-Agent Protocols: Agents need a common, standardized way to talk to each other; authenticated, encrypted, and versioned. Right now it’s wild-west JSON over HTTP.