The Missing Primitives for Trustworthy AI Agents

This is another installment in our ongoing series on building trustworthy AI Agents:

- Part 0 - Introduction

- Part 1 - End-to-End Encryption

- Part 2 - Prompt Injection Protection

- Part 3 - Agent Identity and Attestation

- Part 4 - Policy-as-Code Enforcement

- Part 5 - Verifiable Audit Logs

- Part 6 - Kill Switches and Circuit Breakers

- Part 7 - Adversarial Robustness

- Part 8 - Deterministic Replay

- Part 9 - Formal Verification of Constraints

- Part 10 - Secure Multi-Agent Protocols

- Part 11 - Agent Lifecycle Management

- Part 12 - Resource Governance

- Part 13 - Distributed Agent Orchestration

- Part 14 - Secure Memory Governance

- Part 15 - Agent-Native Observability

- Part 16 - Human-in-the-Loop Governance

- Part 17 - Conclusion (Operational Risk Modeling)

Deterministic Replay (Part 8)

Debugging agent systems is fundamentally harder than debugging traditional software. Logs, metrics, and traces show you what happened, but they cannot reconstruct why it happened or the exact sequence of decisions that led to an unexpected outcome. Once an LLM produces a faulty or surprising plan in production, reproducing the exact path to that decision is functionally impossible without specialized tooling.

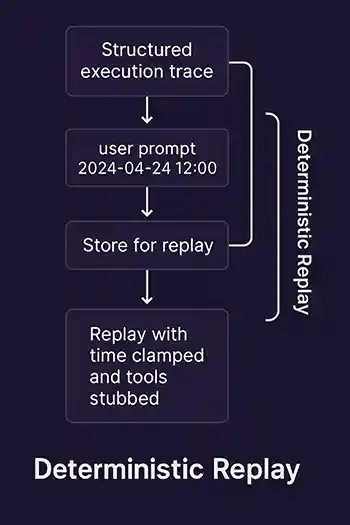

Deterministic replay gives teams the ability to reconstruct an agent run step by step, using recorded events to override nondeterminism and rebuild the exact execution path.

This section defines the primitives required for deterministic replay and shows how to build a practical replay harness for real systems.

Why Agent Debugging Is Nondeterministic

Agent behavior depends on multiple nondeterministic inputs:

- LLM sampling: Temperature and sampling introduce inherent variability, and model versions shift over time.

- Tool nondeterminism: APIs and external tools may return different results on each invocation.

- System clock access: Calls to `time.time()` or `datetime.now()` depend on real time. To ensure fidelity during replay, these calls must be intercepted and replaced with recorded timestamps. This technique is known as time warping or clock virtualization, and it is required to guarantee that time-dependent logic executes identically during replay.

- Data drift: Datasets and structured data sources change, introducing nondeterminism when replayed.

- Concurrency and task ordering: In multi-agent environments, the order of operations cannot be assumed to be stable.

- Prompt and configuration drift: Small changes to templates, hidden prompts, or config values can alter execution.

Deterministic replay collects these variables during the original run and substitutes them back in during replay.
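Clock virtualization can be sketched as a small clock object that the agent calls instead of `time.time()`. The `VirtualClock` class and its record/replay modes below are hypothetical names for illustration, and the sketch assumes every time read in the agent goes through this one object.

```python
import time
from typing import List, Optional


class VirtualClock:
    """Hypothetical clock that records real time, or replays recorded time.

    In record mode, now() reads the system clock and remembers each value.
    In replay mode, now() returns the recorded values in their original order.
    """

    def __init__(self, recorded: Optional[List[float]] = None):
        # recorded=None means record mode; a list means replay mode.
        self.replaying = recorded is not None
        self.readings: List[float] = list(recorded) if recorded is not None else []
        self.cursor = 0

    def now(self) -> float:
        if not self.replaying:
            value = time.time()
            self.readings.append(value)
            return value
        if self.cursor >= len(self.readings):
            # Fail loudly instead of silently falling back to the real clock.
            raise RuntimeError("Replay requested more clock reads than were recorded")
        value = self.readings[self.cursor]
        self.cursor += 1
        return value
```

After a recorded run, `clock.readings` would be stored with the trace; passing that list back as `VirtualClock(recorded=...)` makes every `now()` call return the same values in the same order.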

Primitive 1: Structured Execution Trace

A deterministic replay system starts with one requirement: every meaningful step an agent performs must be captured as a structured, append-only event. This is the only way to later reconstruct the exact execution path. Traditional logging is insufficient because logs are unstructured, lossy, and mix human-oriented descriptions with machine-oriented state. Deterministic replay requires something closer to event sourcing for agents.

To support reliable reconstruction, the trace must include the following information:

- LLM calls: Every interaction with the model must be recorded, including the prompt, sampling parameters, and the exact response returned. This is essential because LLMs are inherently nondeterministic. During replay, we must substitute the recorded responses verbatim.

- Tool calls: Any API call, file write, database query, or subprocess execution must be traced with both the request and response. Tools are a major source of nondeterminism because their outputs depend on external state. By recording tool inputs and outputs, we eliminate the need to query the real system during replay.

- Decisions: Agents make intermediate decisions such as selecting a plan, choosing which tool to invoke, or determining the next action. These high-level decisions often shape control flow and must be recorded independently of LLM or tool events so that replay reproduces the control graph correctly.

- Model parameters: `temperature`, `max_tokens`, `top_p`, and other decode parameters influence the distribution of outputs. Recording these ensures we understand why a particular generation occurred and can validate that replay clients use matching configurations.

- Tool versions: If the agent calls a versioned tool (for example, an internal API or library), the version must be recorded. During replay we must verify that the expected version is used. If not, the system can warn that replay may deviate from real execution.

- Timestamps: Event ordering and time-dependent logic require stable timestamps. The trace must record the time of each event, and during replay, calls to the system clock must be replaced with these recorded values (via time warping or clock virtualization).

- Structured inputs and outputs: To ensure deterministic interpretation, the trace should store inputs and outputs in consistent, machine-readable structures such as dictionaries. Free-form text, while sometimes unavoidable, should be wrapped in structured envelopes.

Together these fields form the ground truth of the agent run: the canonical representation from which replay derives.
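As one way to apply the structured-envelope idea, the sketch below wraps free-form text in a dictionary with a type tag, its length, and a SHA-256 digest so replay can verify the text was not altered. The `wrap_text` and `unwrap_text` helpers and the envelope's field names are illustrative assumptions, not a required schema.

```python
import hashlib
from typing import Any, Dict


def wrap_text(text: str, content_type: str = "text/plain") -> Dict[str, Any]:
    """Wrap free-form text in a structured, verifiable envelope (hypothetical schema)."""
    return {
        "type": content_type,
        "length": len(text),
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "content": text,
    }


def unwrap_text(envelope: Dict[str, Any]) -> str:
    """Return the wrapped text after checking it against the stored digest."""
    text = envelope["content"]
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    if digest != envelope["sha256"]:
        raise ValueError("Envelope content does not match its recorded digest")
    return text
```

An event's `input` or `output` field could then hold such an envelope instead of a bare string, keeping the raw text intact while giving replay a machine-checkable structure.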

Why structured tracing is necessary

Without a structured, append-only trace, the system cannot:

- reproduce LLM outputs

- simulate external tools

- enforce event ordering

- inspect intermediate agent decisions

- diagnose adversarial interference

- test new policies or model versions against real incidents

This makes deterministic replay impossible. The trace writer is therefore the conceptual and architectural center of the replay system.

Example: trace writer

The following code shows a minimal trace writer that captures each event in an execution trace. It serializes events as JSON Lines (JSONL), which is easy to append, stream, diff, and load incrementally. Senior engineers will recognize that this functions like a lightweight event log or write-ahead log for agent execution.

The writer is intentionally simple: deterministic replay depends on the fidelity of recorded events, not the complexity of the system that records them.

```python
import json
import time
import uuid
from dataclasses import dataclass, asdict
from typing import Any, Dict, Optional


@dataclass
class TraceEvent:
    """
    A single structured event in an agent's execution trace.

    Attributes:
        run_id: Unique identifier for the agent run.
        step_id: Sequential step number ensuring stable ordering.
        timestamp: Logical or wall-clock time captured at record time.
        kind: Event type such as "llm_call", "tool_call", or "decision".
        input: Structured input to the operation.
        output: Structured output returned by the operation.
        metadata: Additional context such as model_id or tool version.
    """
    run_id: str
    step_id: int
    timestamp: float
    kind: str
    input: Dict[str, Any]
    output: Dict[str, Any]
    metadata: Dict[str, Any]


class TraceWriter:
    """
    Append-only JSONL writer for deterministic replay.
    Each call to record() generates a complete, immutable TraceEvent.
    """

    def __init__(self, file_path: str, run_id: Optional[str] = None):
        self.file_path = file_path
        self.run_id = run_id or str(uuid.uuid4())
        self.step_counter = 0

    def record(self, kind: str, input: Dict[str, Any],
               output: Dict[str, Any],
               metadata: Optional[Dict[str, Any]] = None) -> TraceEvent:
        """
        Create and append a new trace event.

        The step_id increments monotonically, ensuring deterministic ordering.
        Timestamps capture real or logical time and may later be replayed
        via clock virtualization.
        """
        self.step_counter += 1
        event = TraceEvent(
            run_id=self.run_id,
            step_id=self.step_counter,
            timestamp=time.time(),
            kind=kind,
            input=input,
            output=output,
            metadata=metadata or {},
        )

        # Append to JSONL
        with open(self.file_path, "a", encoding="utf-8") as f:
            f.write(json.dumps(asdict(event)) + "\n")

        return event
```
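Because each line of the JSONL file is a complete event, the trace can also be read incrementally. The `iter_trace` generator below is a hypothetical companion to `TraceWriter`: it assumes one JSON object per line, skips blank lines, and yields plain dictionaries so it stays independent of the `TraceEvent` class.

```python
import json
from typing import Any, Dict, Iterator, Optional


def iter_trace(file_path: str, run_id: Optional[str] = None) -> Iterator[Dict[str, Any]]:
    """Yield trace events one at a time without loading the whole file.

    If run_id is given, only events from that run are yielded.
    """
    with open(file_path, "r", encoding="utf-8") as f:
        for line_number, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines
            try:
                event = json.loads(line)
            except json.JSONDecodeError as exc:
                # For example, a line truncated by an interrupted write.
                raise ValueError(f"Malformed trace line {line_number}") from exc
            if run_id is None or event.get("run_id") == run_id:
                yield event
```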

Primitive 2: Stable Model and Tool Metadata

Deterministic replay is only possible when you can re-establish the exact execution context that produced the original agent behavior. For LLM-driven systems, this context includes far more than the prompt and output. A model invocation is defined by an entire configuration surface area: model identifier, decoding parameters, safety settings, and the version of the underlying model weights. If any of these differ between record time and replay time, the agent’s decision path may diverge.

Similarly, tool calls introduce nondeterminism unless their identity and configuration are captured. APIs evolve, backend services deploy new versions, and data sources change. During replay, using the wrong tool version would produce results that differ from the original run and invalidate the debugging session.

A robust deterministic replay system therefore must record every detail needed to reconstruct the model and tool environment for each step. This includes:

- Model identifier: LLM vendors update model weights, safety settings, and reasoning behavior frequently. Recording the model id (for example, gpt-4.1, internal-model-2025-01, or a fine-tuned checkpoint hash) lets replay validate that responses came from the expected version.

- Decode parameters: Temperature, top_p, top_k, max_tokens, presence penalties, and frequency penalties affect the token distribution. Even small deviations can lead to different control flow. These must be recorded exactly and checked during replay.

- Safety configurations: Modern LLMs have safety settings, guardrails, or policy constraints that influence behavior. If these settings are not recorded, replay will not emulate the same inference path.

- Tool identifiers: If an agent interacts with a tool such as a REST API, SQL database, internal service, or Python function, the tool id must be recorded. This tells the replay harness which deterministic stub should handle the event.

- Tool versions: Backend tools change more frequently than LLMs. A schema migration, model update, or service deployment can change behavior in subtle ways. Capturing the tool version lets replay validate versions and surface mismatches correctly.

- Tool configuration and arguments: Tool calls often depend on configuration (API keys, parameters) or nondeterministic arguments generated by the LLM. Recording the exact input sent to the tool makes the tool call reproducible even if the tool normally queries live state.

Together, these metadata fields form the execution signature of each step in the agent run. The replay engine uses this signature to decide whether replay conditions match the original environment and whether to issue warnings or abort the replay entirely.
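One way to implement the execution signature is to hash a canonical JSON encoding of the metadata fields that must match between record and replay. The `execution_signature` and `check_signature` helpers, the choice of SHA-256, and the strict/warn switch are assumptions for illustration.

```python
import hashlib
import json
import warnings
from typing import Any, Dict, Iterable


def execution_signature(metadata: Dict[str, Any], fields: Iterable[str]) -> str:
    """Hash the selected metadata fields into a stable signature.

    sort_keys and fixed separators make the JSON encoding canonical, so the
    same field values always produce the same digest.
    """
    selected = {name: metadata.get(name) for name in fields}
    encoded = json.dumps(selected, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(encoded.encode("utf-8")).hexdigest()


def check_signature(recorded: Dict[str, Any], current: Dict[str, Any],
                    fields: Iterable[str], strict: bool = True) -> bool:
    """Compare recorded vs. current environment; raise (strict) or warn on mismatch."""
    fields = list(fields)
    if execution_signature(recorded, fields) == execution_signature(current, fields):
        return True
    diffs = [f for f in fields if recorded.get(f) != current.get(f)]
    message = f"Replay environment differs from recording in: {diffs}"
    if strict:
        raise RuntimeError(message)
    warnings.warn(message)
    return False
```

Strict mode maps to aborting the replay; non-strict mode maps to issuing a warning and continuing.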

Why metadata fidelity matters

Model and tool metadata are the glue that binds deterministic replay to real production constraints. Without precise metadata:

- replay output may diverge from the original run

- debugging conclusions may be misleading

- regression tests may inadvertently test against a different model

- policy changes cannot be validated against historical behavior

- forensics cannot reliably reproduce the incident

In short, recording this metadata converts the execution trace from an audit log into a replayable execution state machine.

Example: traced LLM wrapper

The following wrapper demonstrates how to capture a complete LLM call, including model id, decoding parameters, prompt, and response. The trace becomes a source of truth for replay clients.

```python
class TracedLLMClient:
    """
    Wraps an LLM backend and records all requests and responses.
    """

    def __init__(self, backend, trace_writer: TraceWriter,
                 model_id: str, temperature: float = 0.0, max_tokens: int = 512):
        self.backend = backend
        self.trace = trace_writer
        self.model_id = model_id
        self.temperature = temperature
        self.max_tokens = max_tokens

    def generate(self, prompt: str) -> str:
        result = self.backend.generate(
            model=self.model_id,
            prompt=prompt,
            temperature=self.temperature,
            max_tokens=self.max_tokens,
        )

        # Capture model configuration alongside inputs and outputs.
        self.trace.record(
            kind="llm_call",
            input={"prompt": prompt},
            output={"text": result},
            metadata={
                "model_id": self.model_id,
                "temperature": self.temperature,
                "max_tokens": self.max_tokens,
            },
        )

        return result
```
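The same pattern applies to tools, which the example above does not cover. The sketch below records `tool_id`, `tool_version`, and the exact arguments for each call. To run on its own, it uses a hypothetical in-memory `ListTrace` with the same `record()` shape as `TraceWriter`, and it assumes the tool is a plain Python callable that takes keyword arguments and returns a JSON-serializable result.

```python
from typing import Any, Callable, Dict, List, Optional


class ListTrace:
    """Hypothetical in-memory stand-in for TraceWriter (same record() shape)."""

    def __init__(self):
        self.events: List[Dict[str, Any]] = []

    def record(self, kind: str, input: Dict[str, Any], output: Dict[str, Any],
               metadata: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        event = {"step_id": len(self.events) + 1, "kind": kind, "input": input,
                 "output": output, "metadata": metadata or {}}
        self.events.append(event)
        return event


class TracedToolClient:
    """Wraps a tool callable and records identity, version, arguments, and result."""

    def __init__(self, tool: Callable[..., Any], trace, tool_id: str, tool_version: str):
        self.tool = tool
        self.trace = trace
        self.tool_id = tool_id
        self.tool_version = tool_version

    def execute(self, **kwargs: Any) -> Any:
        result = self.tool(**kwargs)
        # Record the exact arguments so replay never needs the live tool.
        self.trace.record(
            kind="tool_call",
            input={"args": kwargs},
            output={"result": result},
            metadata={"tool_id": self.tool_id, "tool_version": self.tool_version},
        )
        return result
```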

Primitive 3: Replay Engine

Recording a structured trace gives you a chronological log of what happened. The replay engine is the component that transforms that log into a deterministic simulation environment. Its job is to take the recorded execution trace and inject those events back into the agent at replay time, replacing all nondeterministic operations.

Conceptually, the replay engine functions like the runtime of an event sourced system. Instead of calling the live LLM or live tools, the agent calls deterministic stubs which query the replay engine for the next recorded event. The replay engine then provides the exact input, output, and metadata that were captured during the original run.

This allows the agent to run entirely within a closed, deterministic world. All live dependencies are replaced by trace backed sources of truth.

For senior engineers, the replay engine must satisfy several correctness properties:

- Deterministic event ordering: The engine must return events in exactly the order in which they were recorded. If a trace contains interleaved LLM calls, tool calls, and decisions, the replay engine must preserve the recorded sequence within each category of operation.

- Event type isolation: LLM calls, tool calls, decision events, and timestamps must be separated so that the replay stubs can request events of the correct type without ambiguity. For example, a ReplayLLMClient should only consume “llm_call” events, while a ReplayToolClient should only consume “tool_call” events.

- Cursor state: Replay must be stateful. Each class of event needs an independent cursor, tracking progress through its segment of the trace. This prevents events from being consumed out of order.

- Exhaustion detection: If the agent attempts to perform more operations than appear in the trace, the replay engine must fail loudly. Silent fallbacks to live systems would break determinism and invalidate the debugging session.

- Integrity and version checks: Each event includes metadata describing model_id, tool_id, version numbers, and configuration. The replay engine must enforce metadata consistency so that engineers know if the replay environment diverges from the original run.

These guarantees ensure the replay engine is not just a convenience layer but a true deterministic substrate under the agent runtime.
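Per-kind cursors keep each event type in order, but on their own they do not confirm that the agent interleaves LLM calls and tool calls in the recorded sequence. One hedged way to add that check is to require that every consumed event has a larger `step_id` than the previously consumed one, regardless of kind. The `OrderedCursor` class below is a hypothetical, self-contained illustration over plain event dictionaries. Kinds the agent never consumes during replay (such as decisions it re-records itself) are simply skipped, so this check catches reordering but not omissions.

```python
from typing import Any, Dict, List


class OrderedCursor:
    """Per-kind cursors plus a global check on recorded step order (sketch)."""

    def __init__(self, events: List[Dict[str, Any]]):
        self.by_kind: Dict[str, List[Dict[str, Any]]] = {}
        for event in sorted(events, key=lambda e: e["step_id"]):
            self.by_kind.setdefault(event["kind"], []).append(event)
        self.cursors = {kind: 0 for kind in self.by_kind}
        self.last_step = 0  # step_id of the most recently consumed event

    def next_event(self, kind: str) -> Dict[str, Any]:
        events = self.by_kind.get(kind, [])
        idx = self.cursors.get(kind, 0)
        if idx >= len(events):
            raise RuntimeError(f"Exhausted events of kind '{kind}'")
        event = events[idx]
        if event["step_id"] <= self.last_step:
            # This event happened earlier in the recording than one already replayed.
            raise RuntimeError(
                f"Out-of-order replay: step {event['step_id']} requested "
                f"after step {self.last_step}"
            )
        self.cursors[kind] = idx + 1
        self.last_step = event["step_id"]
        return event
```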

Example: trace loader and index

The following code shows two core components of the replay engine:

- load_trace: Reads all trace events for a given run_id into memory and sorts them deterministically.

- TraceIndex: Groups events by kind and provides a cursor so replay clients can fetch events sequentially.

This pair of components forms the backbone of deterministic behavior. All replay stubs rely on them to retrieve the correct recorded state.

```python
from typing import List


def load_trace(file_path: str, run_id: str) -> List[TraceEvent]:
    """
    Load and return all TraceEvent objects for the given run_id.
    The result is sorted by step_id to ensure deterministic ordering.
    """
    events = []
    with open(file_path, "r", encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines so they do not break json.loads
            data = json.loads(line)
            if data["run_id"] == run_id:
                events.append(TraceEvent(**data))
    events.sort(key=lambda e: e.step_id)
    return events
```

```python
class TraceIndex:
    """
    Provides deterministic, cursor-based access to trace events.
    Events are grouped by kind (e.g., llm_call, tool_call, decision).
    Each event kind has its own cursor so replay clients can request
    the next appropriate event without interfering with others.
    """

    def __init__(self, events):
        self.by_kind = {}
        for event in events:
            self.by_kind.setdefault(event.kind, []).append(event)

        # Independent cursor per kind guarantees isolation
        self.cursors = {kind: 0 for kind in self.by_kind}

    def next_event(self, kind: str) -> TraceEvent:
        """
        Return the next event of the specified kind.
        Raises an error if events are exhausted or unavailable.
        """
        if kind not in self.by_kind:
            raise RuntimeError(f"No events of kind '{kind}' in trace")

        idx = self.cursors[kind]
        events = self.by_kind[kind]
        if idx >= len(events):
            raise RuntimeError(f"Exhausted events of kind '{kind}'")

        event = events[idx]
        self.cursors[kind] += 1
        return event
```

This minimal structure is intentionally simple because deterministic replay depends more on fidelity than sophistication. Engineers must be able to trust:

- that the events provided by replay are exact

- that ordering is stable

- that metadata is faithfully preserved

- that replay will fail loudly if the agent attempts an operation that was not captured

- that no hidden nondeterminism leaks into the replay environment

Together, load_trace and TraceIndex serve as the deterministic foundation for all higher abstractions: ReplayLLMClient, ReplayToolClient, the agent harness, and eventually regression testing.

Primitive 4: Deterministic Stubs for LLMs and Tools

Once an execution trace has been captured and indexed, the next requirement is to replace every nondeterministic dependency with deterministic, trace-backed components. These replacements are known as replay stubs. Their job is simple but foundational: instead of calling real models or live tools, they query the replay engine and return the exact outputs recorded during the original run.

To an engineer, this mirrors how tests isolate external systems in distributed environments. Just as microservices are tested with mock clients or in-memory fakes, agent systems require deterministic substitutes for LLMs and tools to guarantee that replay does not leak nondeterminism back into the execution path.

Replay stubs must satisfy several engineering constraints:

- Strict determinism: Replay stubs must never call the live model, hit a real API, read from a database, or depend on the system clock. All outputs must come directly from the trace.

- Event-type isolation: Each stub must request specific event kinds (for example “llm_call” or “tool_call”) from the replay engine. This prevents accidental consumption of unrelated events, which would break re-execution consistency.

- Input consistency validation: Optional but recommended. Replay stubs can validate that inputs during replay match the recorded inputs. This helps engineers detect cases where control flow diverged from the recorded run.

- Metadata verification: Stubs should verify model ids, tool ids, version numbers, and configuration metadata. If the environment has drifted since the original run, the stub should surface this mismatch immediately.

- Failure on exhaustion: If the agent attempts to perform additional calls that were not captured in the original trace, the replay engine must throw an error. Continuing silently would produce a partial or misleading reproduction.

Replay stubs turn the trace into a deterministic “execution oracle,” ensuring the agent sees exactly what it saw at record time.

Replay Stubs in Practice

A replay system must provide deterministic versions of:

- LLM client: Returns the recorded model output token-for-token.

- Tool client: Returns the recorded tool output (API response, file result, SQL query output, etc.).

This transforms the entire agent runtime into a closed deterministic environment that behaves identically on each replay.

ReplayLLMClient

The ReplayLLMClient replaces the real LLM with a stub that reads from the trace.

If the agent asks for an LLM generation, it does not call the model. It asks the TraceIndex for the next “llm_call” event and returns its recorded output.

This guarantees deterministic reproduction of model behavior without the variability of temperature sampling, distribution drift, or model updates.

```python
class ReplayLLMClient:
    """
    Deterministic stub that replays recorded LLM responses.
    """

    def __init__(self, trace_index: TraceIndex, model_id: str):
        self.index = trace_index
        self.model_id = model_id

    def generate(self, prompt: str) -> str:
        event = self.index.next_event("llm_call")

        # Validate that this replay is aligned with the recorded model.
        if event.metadata.get("model_id") != self.model_id:
            raise RuntimeError("Model id mismatch during replay")

        # Optional: validate prompt similarity if strict replay is desired.
        return event.output["text"]
```

Engineer’s note: This approach does not require prompting the model or re-running any token generation. It emulates the exact decision chain by reusing recorded outputs. This eliminates nondeterminism and ensures debugging sessions produce identical reasoning paths.
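The `ReplayLLMClient` above leaves prompt validation as an optional step. A strict variant might compare the replayed prompt with the recorded one and report the first divergence. The sketch below is a hypothetical, self-contained version that works on a plain list of recorded `llm_call` dictionaries with `input["prompt"]`, `output["text"]`, and `metadata["model_id"]`, matching the fields the traced wrapper records. It uses exact string equality, which is the simplest policy but will flag even whitespace changes.

```python
from typing import Any, Dict, List


class PromptMismatch(RuntimeError):
    """Raised when replay sends a different prompt than the recording."""


class StrictReplayLLM:
    """Replays recorded LLM outputs only if model and prompt both match (sketch)."""

    def __init__(self, llm_events: List[Dict[str, Any]], model_id: str):
        self.events = llm_events
        self.model_id = model_id
        self.cursor = 0

    def generate(self, prompt: str) -> str:
        if self.cursor >= len(self.events):
            raise RuntimeError("Exhausted recorded llm_call events")
        event = self.events[self.cursor]
        if event["metadata"].get("model_id") != self.model_id:
            raise RuntimeError("Model id mismatch during replay")
        recorded = event["input"]["prompt"]
        if prompt != recorded:
            # Locate the first differing character to make the divergence easy to inspect.
            pos = next((i for i, (a, b) in enumerate(zip(prompt, recorded)) if a != b),
                       min(len(prompt), len(recorded)))
            raise PromptMismatch(
                f"Prompt diverged at llm_call #{self.cursor + 1}, character {pos}"
            )
        self.cursor += 1
        return event["output"]["text"]
```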

ReplayToolClient

Tools introduce even more nondeterminism than LLMs: external APIs, databases, file operations, and long-running services all have state that drifts over time. Replay must avoid touching these real systems.

ReplayToolClient fills this gap by replaying only the recorded tool outputs. During replay, tool invocations become pure functions backed entirely by the trace.

```python
class ReplayToolClient:
    """
    Deterministic stub that replays recorded tool responses.
    """

    def __init__(self, trace_index: TraceIndex, tool_id: str):
        self.index = trace_index
        self.tool_id = tool_id

    def execute(self, plan: str) -> str:
        event = self.index.next_event("tool_call")

        # Ensure the recorded tool identity matches expectations.
        if event.metadata.get("tool_id") != self.tool_id:
            raise RuntimeError("Tool id mismatch during replay")

        # Optional: validate the plan or arguments for strict replay.
        return event.output["result"]
```

Engineer’s note: Tool calls often introduce hidden time, randomness, or version drift. ReplayToolClient eliminates all of these factors, turning the agent’s tool interactions into deterministic, replayable steps.

Why Deterministic Stubs Are Essential

Replay stubs allow you to:

- debug complex agent failures without touching external systems

- re-evaluate agent performance under new policies or model versions

- investigate adversarial incidents (see Part 7)

- test control flow without risk to production infrastructure

- isolate whether a failure came from the LLM, the tool, or agent logic

Without deterministic stubs, replay is incomplete, and engineers are left attempting to reconstruct behavior through logs alone.

Replay stubs turn recorded traces into a fully controlled sandbox for debugging, testing, and governance.

Primitive 5: Deterministic Agent Harness

With structured traces (Primitive 1), deterministic metadata capture (Primitive 2), and replay stubs for all nondeterministic components (Primitive 4), we can build a deterministic agent harness: an execution environment where the agent can run in either:

- Record mode: interacting with real LLMs, real tools, and real data while capturing everything into a trace.

- Replay mode: interacting exclusively with deterministic stubs that feed back recorded results from the trace.

A well-designed harness ensures the agent itself remains unchanged. The agent logic, state machine, or task orchestration operates as-is; only the dependencies around it change. This provides three major advantages:

- Zero-intrusion debugging: You do not need to rewrite the agent to debug it. You simply swap the LLM and tool clients, and the agent proceeds through its workflow, consuming recorded outputs deterministically.

- Binary equivalence between record and replay: If the agent code is unchanged, any divergence during replay signals a real correctness issue: a control-flow fork, prompt drift, misalignment between planning logic and execution logic, or an untraced dependency. Engineers get an immediate signal that something in the system is unstable or implicitly nondeterministic.

- Full coverage of agent behavior: Replaying an agent deterministically allows engineers to inspect intermediate decisions, verify model-to-tool interactions, run adversarial forensics, and apply policy changes to past runs.

The importance of structured LLM outputs

A replay harness is only useful if the agent behaves the same way under record and replay. One of the most common sources of hidden nondeterminism is the LLM “plan” output used to construct the next tool call.

If the model returns free-form text and the agent parses it heuristically (regex, keyword matching, pattern extraction), then minor differences between runs can cause:

- different tool arguments

- different tool selection

- branching into different actions

- inconsistent parsing

- diverging control flow during replay

To avoid this, in production the LLM output that determines the tool call (the plan) should be emitted in a strict structured form such as JSON or a Pydantic model. Structured plans make tool invocation parseable, replayable, and deterministic given the recorded plan. They also ensure the replay trace captures semantically meaningful structures rather than brittle free-text instructions.
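A minimal sketch of strict plan parsing, assuming the model is prompted to return a JSON object with `tool` and `arguments` keys (a hypothetical schema) and that only tools on an allow-list may be selected. It uses only the standard library; a Pydantic model would serve the same purpose. Any deviation raises instead of being guessed at, so a malformed plan fails the same way in record and replay.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, FrozenSet


@dataclass(frozen=True)
class ToolPlan:
    tool: str
    arguments: Dict[str, Any]


def parse_plan(raw: str, allowed_tools: FrozenSet[str]) -> ToolPlan:
    """Parse an LLM plan strictly; reject anything outside the expected shape."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("Plan is not valid JSON") from exc
    if not isinstance(data, dict) or set(data) != {"tool", "arguments"}:
        raise ValueError("Plan must be an object with exactly 'tool' and 'arguments'")
    if not isinstance(data["tool"], str) or data["tool"] not in allowed_tools:
        raise ValueError(f"Unknown tool: {data['tool']!r}")
    if not isinstance(data["arguments"], dict):
        raise ValueError("'arguments' must be an object")
    return ToolPlan(tool=data["tool"], arguments=data["arguments"])
```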

Example: agent class

The following simplified agent example demonstrates a typical three-step workflow:

- Use an LLM to generate a structured plan.

- Execute a tool based on the plan.

- Use the LLM to summarize or synthesize results.

Because the agent’s LLM and tool clients are injected, the same code path supports both record mode and replay mode transparently.

```python
class Agent:
    """
    Example agent workflow:
        1. LLM plan
        2. Tool execute
        3. LLM summarize

    This class is intentionally minimal. Its determinism relies entirely on
    the behavior of the injected clients and the structure of the recorded trace.
    """

    def __init__(self, llm_client, tool_client, trace_writer: TraceWriter):
        self.llm = llm_client
        self.tool = tool_client
        self.trace = trace_writer

    def run(self, user_query: str) -> str:
        # Step 1: Ask the LLM to create a structured plan.
        plan = self.llm.generate(f"Plan a response for: {user_query}")

        # Record the decision explicitly.
        self.trace.record(
            kind="decision",
            input={"user_query": user_query},
            output={"plan": plan},
            metadata={},
        )

        # Step 2: Execute the plan using a tool.
        tool_result = self.tool.execute(plan)

        # Step 3: Ask the LLM to summarize the tool result.
        summary = self.llm.generate(
            f"Summarize the tool result: {tool_result}"
        )

        return summary
```

Why this harness design matters

This form of dependency injection accomplishes several important engineering goals:

- Determinism: During replay, LLM and tool calls are fetched exclusively from the trace. No external nondeterminism can leak in.

- Consistency: The agent’s actual logic remains unchanged between record and replay. This ensures that debugging is accurate and that regressions detected via replay reflect real-world control-flow differences rather than artifacts of a modified code path.

- Runtime symmetry: Record mode and replay mode share the same agent implementation. Engineers can trust the replay results because the agent is executing the same logic in both cases.

- Testability: The harness behaves like a pure function of trace input. Given the same trace, the agent must produce the same deterministic output every time.

- Safety and governance integration: Because the harness integrates with the trace writer, it creates a unified timeline of decisions, tool usage, and final results that can be tied back to audit logs, policy decisions, or adversarial detection layers.
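The testability property can be checked mechanically: replay the same trace twice with fresh stubs and require identical results. The self-contained sketch below uses a toy plan/execute/summarize agent with the same shape as the `Agent` class and list-backed stubs in place of the `TraceIndex`-based clients; `ListReplayLLM`, `ListReplayTool`, `toy_agent`, and `replay_twice` are hypothetical names.

```python
from typing import Callable, Dict, List


class ListReplayLLM:
    """Returns recorded LLM outputs in order; never calls a model."""

    def __init__(self, outputs: List[str]):
        self.outputs, self.cursor = list(outputs), 0

    def generate(self, prompt: str) -> str:
        if self.cursor >= len(self.outputs):
            raise RuntimeError("Exhausted recorded llm_call events")
        self.cursor += 1
        return self.outputs[self.cursor - 1]


class ListReplayTool:
    """Returns recorded tool outputs in order; never touches a live system."""

    def __init__(self, results: List[str]):
        self.results, self.cursor = list(results), 0

    def execute(self, plan: str) -> str:
        if self.cursor >= len(self.results):
            raise RuntimeError("Exhausted recorded tool_call events")
        self.cursor += 1
        return self.results[self.cursor - 1]


def toy_agent(llm, tool, user_query: str) -> str:
    # Same three-step shape as the Agent class: plan, execute, summarize.
    plan = llm.generate(f"Plan a response for: {user_query}")
    result = tool.execute(plan)
    return llm.generate(f"Summarize the tool result: {result}")


def replay_twice(trace: Dict[str, List[str]], user_query: str,
                 agent: Callable[..., str] = toy_agent) -> str:
    """Replay a trace twice with fresh stubs; fail if the outputs differ."""
    outputs = [
        agent(ListReplayLLM(trace["llm_call"]), ListReplayTool(trace["tool_call"]), user_query)
        for _ in range(2)
    ]
    if outputs[0] != outputs[1]:
        raise RuntimeError(f"Replay is not deterministic: {outputs!r}")
    return outputs[0]
```

A failure here points at hidden state in the agent itself (counters, caches, unpatched clock reads), since the stubs guarantee identical inputs on both runs.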

Primitive 6: Governance Integration

Deterministic replay is not just a debugging primitive. It is a foundational capability for AI governance, operational assurance, and post-incident forensics. In a mature agent platform, replay does not sit apart from the safety stack; it is woven directly into it.

A well-governed agent ecosystem relies on trace-level visibility to validate compliance, diagnose failures, and reconstruct complex multi-step interactions across agents, tools, and policy layers. When combined with the primitives introduced in earlier parts of this series, deterministic replay becomes a unifying mechanism that ties together identity, policy, audit logging, and observability into a coherent whole.

A senior engineer should view replay not as an isolated debugging feature but as the glue layer connecting operational reliability with trust and compliance requirements.

Replay integrates with other primitives in the following ways:

1. Audit logs (Part 5)

The execution trace and audit log should share the same run_id. This creates a single source of truth where:

- trace events provide step-level execution detail

- audit events provide compliance and lineage detail

Together, these form a two-dimensional record: what the agent did and why it was allowed to do it.

Replay leverages this linkage to correlate decisions with authorization checks, which is essential for post-incident analysis.
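Because the trace and the audit log share a `run_id`, correlating them is essentially a join. The sketch below assumes, hypothetically, that audit entries also carry the `step_id` of the trace event they authorize and an `allowed` flag; neither field is defined in this part, and a real audit schema (Part 5) may differ.

```python
from collections import defaultdict
from typing import Any, Dict, List


def correlate(trace: List[Dict[str, Any]], audit: List[Dict[str, Any]],
              run_id: str) -> List[Dict[str, Any]]:
    """Pair each trace event of one run with the audit entries for the same step.

    Assumes (hypothetically) that audit entries carry run_id, step_id, and allowed.
    """
    audit_by_step: Dict[int, List[Dict[str, Any]]] = defaultdict(list)
    for entry in audit:
        if entry["run_id"] == run_id:
            audit_by_step[entry["step_id"]].append(entry)

    timeline = []
    for event in sorted(trace, key=lambda e: e["step_id"]):
        if event["run_id"] != run_id:
            continue
        entries = audit_by_step.get(event["step_id"], [])
        timeline.append({
            "step_id": event["step_id"],
            "kind": event["kind"],
            # A step with no audit entry is reported as not authorized,
            # so gaps in the audit trail stand out.
            "authorized": bool(entries) and all(e["allowed"] for e in entries),
            "audit_entries": entries,
        })
    return timeline
```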

2. Kill switches and circuit breakers (Part 6)

Kill switch or breaker activations should also be recorded as trace events. This allows the replay engine to surface moments when a safety layer intervened.

During analysis, engineers can:

- verify whether breakers fired at the correct threshold

- simulate different breaker configurations by replaying historical runs

- validate that kill-switch logic interacts correctly with updated policies

Replay makes it possible to test these changes safely using historical real-world bursts, spikes, or anomalies.
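Simulating a different breaker configuration against a historical run can be as simple as re-evaluating the breaker rule over the recorded events. The sketch assumes a hypothetical consecutive-failure breaker and a hypothetical `error` flag in each `tool_call` event's output; real breakers from Part 6 may use other signals such as error rates or time windows.

```python
from typing import Any, Dict, List, Optional


def first_trip(events: List[Dict[str, Any]], threshold: int) -> Optional[int]:
    """Return the step_id at which a consecutive-failure breaker would trip.

    Only tool_call events are considered; a success resets the failure count.
    Returns None if the breaker would never have tripped on this run.
    """
    consecutive = 0
    for event in sorted(events, key=lambda e: e["step_id"]):
        if event["kind"] != "tool_call":
            continue
        if event["output"].get("error"):
            consecutive += 1
            if consecutive >= threshold:
                return event["step_id"]
        else:
            consecutive = 0
    return None
```

Running `first_trip` with several thresholds over the same recorded run shows whether, and how early, each configuration would have stopped the agent.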

3. Identity and attestation metadata (Part 3)

Every LLM call, tool call, or decision should include the identity of the calling agent. Replay must preserve:

- the SPIFFE ID (and the SVID that asserts it)

- the agent’s attestation

- any identity-scoped configuration

This matters for governance because identity dictates:

- which policies apply

- which resources the agent is allowed to access

- how suspicious actions should be interpreted

During replay, identity metadata allows teams to reproduce the exact trust boundary in place during the original incident.

4. Policy evaluations (Part 4)

Policy checks should be surfaced as explicit events in the trace. For example, an agent action that required OPA evaluation should result in:

- kind: "policy_check"

- input: { action, agent_id, payload }

- output: { decision, reason }

This allows replay workflows to:

- validate policy behavior over time

- detect regressions in policy logic

- replay historical policy decisions under new rules for impact analysis

- identify when a misconfiguration caused an agent to be allowed or denied incorrectly

Replay becomes a governance tool that explains policy decisions, not just code behavior.
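Replaying historical policy decisions under new rules can be sketched as re-evaluating each recorded `policy_check` input with the candidate policy and collecting the decisions that would change. The new policy is modeled here as a plain Python function standing in for an OPA query; the field names follow the event shape above, and the `"allow"`/`"deny"` decision values are illustrative assumptions.

```python
from typing import Any, Callable, Dict, List


def policy_impact(events: List[Dict[str, Any]],
                  new_policy: Callable[[Dict[str, Any]], str]) -> List[Dict[str, Any]]:
    """List recorded policy checks whose decision would change under new_policy."""
    changes = []
    for event in events:
        if event["kind"] != "policy_check":
            continue
        old = event["output"]["decision"]
        new = new_policy(event["input"])
        if new != old:
            changes.append({
                "step_id": event["step_id"],
                "action": event["input"].get("action"),
                "old": old,
                "new": new,
            })
    return changes
```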

5. Adversarial detection signals (Part 7)

Adversarial flags, filtered inputs, classifier scores, and probe detection events should also be part of the trace.

These signals allow replay to reconstruct:

- how an adversarial input propagated

- how safety filters handled it

- whether a misclassification or bypass occurred

- where in the pipeline the failure originated

Replay becomes a forensic tool capable of reconstructing adversarial events end-to-end.

6. Complete behavioral lineage

When all the above are combined, deterministic replay provides a complete behavioral lineage for every agent run:

- Who the agent was (identity, attestation)

- What decisions it made (LLM and plan outputs)

- What policies applied (OPA or equivalent checks)

- What tools it invoked (versioned, replayable calls)

- Which safety layers triggered (breakers, kill switches, adversarial detectors)

- What the final result was

This makes deterministic replay the unifying primitive for trustworthy AI. It bridges operations, forensics, governance, safety, and debugging without requiring divergent tooling.

Why this integration matters

For senior engineers responsible for reliability and risk:

- Replay enables post-incident reproducibility

- Governance teams gain transparent, verifiable behavioral records

- Security teams gain a mechanism for adversarial incident reconstruction

- Platform teams gain historical regression datasets

- Architects gain a tool for policy effect simulation across historical workloads

Replay is not an observability add-on. It is a structural requirement for agent systems that operate autonomously and make high-stakes decisions.

Primitive 7: Deterministic Regression Testing

Deterministic replay does more than enable one-off debugging. It opens the door to a new category of automated testing for agentic systems: replay-driven regression testing, the agent-system counterpart of what traditional software development calls golden file testing or snapshot testing. In agent environments, however, the stakes and complexity are far higher.

Agents behave as distributed reasoning engines, combining LLM planning, policy checks, tool use, and intermediate decision steps. Their output is not a single function of the input. It is a temporal chain of reasoning and external effects, any part of which may shift subtly when models, configuration, or policies drift.

Deterministic regression testing allows engineering teams to use historical traces as frozen behavioral baselines, ensuring that:

- changes to prompts

- upgrades to model versions

- policy adjustments

- tool migrations

- safety-layer modifications

do not unintentionally change how agents behave on real-world workloads.

This solves a fundamental problem in modern ML systems: we cannot rely on unit tests alone because the outputs of LLM-driven agents are emergent, contextual, and highly sensitive to upstream configuration. Instead, we use deterministic replay to treat historical agent behavior as a stable contract and verify that future behavior does not violate it.

What deterministic regression testing provides

1. Behavior as a stable artifact

Instead of asserting that “the agent should respond like X,” regression tests assert, “When replaying a specific historical run, the agent must behave exactly as it did before.”

This grounds testing in observed behavior rather than in expectations codified by humans.

2. Policy and model upgrade safety

If a new model release or policy configuration alters behavior during replay, engineers can compare the new output with the old one and determine whether the change is intentional or a regression.
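Comparing old and new output is easiest when both are serialized canonically first. Below is a minimal sketch that uses Python's standard `json` and `difflib` modules. The output structure (`plan`, `final`) is a hypothetical example of what a recorded run might produce.

```python
import difflib
import json
from typing import Any


def behavior_diff(baseline: Any, candidate: Any) -> list[str]:
    """Unified diff between two recorded outputs; an empty list means identical behavior."""
    # Canonical JSON (sorted keys, fixed indent) so key order alone never registers as a change.
    old = json.dumps(baseline, sort_keys=True, indent=2).splitlines()
    new = json.dumps(candidate, sort_keys=True, indent=2).splitlines()
    return list(difflib.unified_diff(old, new, fromfile="baseline",
                                     tofile="candidate", lineterm=""))


baseline = {"plan": ["lookup_order", "issue_refund"], "final": "Refund issued"}
candidate = {"plan": ["lookup_order", "escalate"], "final": "Escalated to a human"}
print("\n".join(behavior_diff(baseline, candidate)))
```

The diff only makes the change visible. Deciding whether it is an intended improvement or a regression is still an engineering judgment.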

3. Adversarial reproduction

Historical adversarial interactions (as captured in Part 7) can be replayed to confirm defenses are effective under new model versions.

4. Safety net against subtle drift

Even small changes in prompts or model defaults can materially alter behavior. Replay-based regression testing highlights these changes without requiring manual inspection.

5. Auditable, reproducible evidence

Replay produces deterministic outputs that can be archived, versioned, or compared over time, enabling auditability and long-term behavioral stability guarantees.
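For archiving and comparison, one common approach is to store a content hash of each replayed output alongside its trace. The sketch below hashes canonical JSON with SHA-256. The canonicalization choices (sorted keys, compact separators) are assumptions, and outputs must be JSON-serializable for this to work.

```python
import hashlib
import json
from typing import Any


def fingerprint(output: Any) -> str:
    """SHA-256 of canonical JSON, so dict key order never changes the fingerprint."""
    canonical = json.dumps(output, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Archive the fingerprint of a known-good replay, then compare later replays against it.
golden = {"run-123": fingerprint({"plan": ["lookup", "refund"], "final": "Refund issued"})}
later = fingerprint({"final": "Refund issued", "plan": ["lookup", "refund"]})
print(later == golden["run-123"])  # True
```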

Example: simplified regression harness

The following harness demonstrates how to perform deterministic regression testing using the previously defined replay components.

The workflow is:

- Load the trace from disk.

- Create a TraceIndex for deterministic cursor-based access.

- Plug deterministic replay stubs into the agent harness.

- Choose the historical user input from the trace.

- Run the agent deterministically.

- Assert properties of the final output, simulating golden file testing.

```python
def run_regression(trace_path: str, run_id: str):
    """
    Deterministically replay a historical run and verify expected behavior.

    This is a form of golden file or snapshot testing, where the replayed
    output is compared against known good historical behavior.
    """
    # Load and index historical trace
    events = load_trace(trace_path, run_id)
    index = TraceIndex(events)

    # Create deterministic replay stubs for LLM and tools
    replay_llm = ReplayLLMClient(index, model_id="internal-model-2025-01")
    replay_tool = ReplayToolClient(index, tool_id="example-tool")

    # Replay mode does not write new trace events
    trace_writer = TraceWriter("/dev/null", run_id=run_id)

    # Agent instance wired entirely to deterministic components
    agent = Agent(replay_llm, replay_tool, trace_writer)

    # Extract the original user query from the first decision event
    decision_events = [e for e in events if e.kind == "decision"]
    if not decision_events:
        raise RuntimeError("No decision event found in trace")
    user_query = decision_events[0].input["user_query"]

    # Perform a full deterministic re-execution
    result = agent.run(user_query)

    # Golden file style assertion
    # If this property fails, it indicates behavioral drift.
    assert "ERROR" not in result

    return result
```

Why deterministic regression testing is essential

For senior engineers responsible for reliability, governance, and ML platform stability, deterministic regression testing provides unique assurances:

- Behavioral invariance: If behavior changes, you know that it changed, when it changed, and under what configuration.

- Impact analysis: You can replay thousands of historical runs against a new model or new prompts to evaluate impact before production rollout.

- Safety and policy correctness: You can verify that changes in OPA rules, prompt guardrails, or kill-switch thresholds do not produce unexpected agent behavior.

- Production-grade adversarial hardening: Captured adversarial traces become a permanent test suite, guarding against regressions that reopen previously fixed vulnerabilities.

- Confidence in controlled deploys: Before rolling out new model versions, you can deterministically replay a representative corpus of past traffic to ensure the new model behaves as expected.
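At corpus scale, impact analysis comes down to replaying each archived run under the candidate configuration and counting how many runs diverge. The sketch below is minimal, and `ImpactReport`, `replay_corpus`, and `fake_replay` are hypothetical names. It also rests on one wiring assumption. If the LLM stub serves recorded responses, the new model is never exercised. For model evaluation, `replay_fn` would therefore typically call the candidate model live while tools and the clock stay pinned to the trace.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ImpactReport:
    total: int
    diverged: list[str] = field(default_factory=list)
    errors: dict[str, str] = field(default_factory=dict)

    @property
    def divergence_rate(self) -> float:
        """Share of runs whose replayed output differs from the recorded baseline."""
        return len(self.diverged) / self.total if self.total else 0.0


def replay_corpus(baselines: dict[str, Any], replay_fn: Callable[[str], Any]) -> ImpactReport:
    """Replay each historical run under a candidate configuration and compare to its baseline.

    baselines maps run_id -> recorded final output; replay_fn(run_id) re-executes
    that run under the candidate (new model, prompts, or policies).
    """
    report = ImpactReport(total=len(baselines))
    for run_id, expected in baselines.items():
        try:
            actual = replay_fn(run_id)
        except Exception as exc:  # a crash during replay is itself a regression signal
            report.errors[run_id] = repr(exc)
            continue
        if actual != expected:
            report.diverged.append(run_id)
    return report


# Toy corpus; in practice baselines come from archived traces.
baselines = {"run-1": "Refund issued", "run-2": "Escalated to a human", "run-3": "Order shipped"}
candidate = {"run-1": "Refund issued", "run-2": "Refund issued"}  # run-3 missing: replay fails


def fake_replay(run_id: str) -> str:
    return candidate[run_id]


report = replay_corpus(baselines, fake_replay)
print(report.diverged, list(report.errors))  # ['run-2'] ['run-3']
```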

Why This Matters

Deterministic replay closes the final observability gap in agent systems. It gives engineering teams the ability to:

- reliably reproduce errors

- inspect internal decision chains

- analyze adversarial inputs with precision

- validate policy changes across historical workflows

- run snapshot tests using real-world traffic

Combined with the previous primitives, deterministic replay completes the foundation for trustworthy AI agents.

Practical Next Steps

- Wrap all LLM and tool calls with trace recording wrappers.

- Apply time warping or clock virtualization during replay.

- Require structured outputs from LLM planning steps.

- Treat execution traces as golden files for regression testing.

- Integrate replay into your on-call and forensic workflows.
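The first two steps can be combined in a minimal record/replay wrapper, sketched below. `Recorder`, its event format, and the `fetch_price` tool are hypothetical. A production version would persist events to the trace store, handle outputs that are not JSON-serializable, and cover LLM clients as well as tools. The same object also serves clock reads, so time-dependent logic sees recorded timestamps during replay.

```python
import functools
import random
import time
from typing import Any, Callable, Optional


class Recorder:
    """Hypothetical record/replay wrapper for tool calls, LLM calls, and clock reads."""

    def __init__(self, mode: str = "record", events: Optional[list] = None):
        if mode not in ("record", "replay"):
            raise ValueError(f"unknown mode: {mode}")
        self.mode = mode
        self.events: list = events if events is not None else []
        self._cursor = 0  # index of the next recorded event to serve during replay

    def start_replay(self) -> None:
        """Switch to replay mode and rewind to the first recorded event."""
        self.mode = "replay"
        self._cursor = 0

    def _next(self, kind: str, name: str, call_input: dict) -> Any:
        # Replay must consume events in exactly the recorded order, with the same inputs.
        if self._cursor >= len(self.events):
            raise RuntimeError(f"trace exhausted at {kind}:{name}")
        event = self.events[self._cursor]
        if (event["kind"], event["name"], event["input"]) != (kind, name, call_input):
            raise RuntimeError(f"divergence at event {self._cursor}: "
                               f"recorded {event['kind']}:{event['name']}, got {kind}:{name}")
        self._cursor += 1
        return event["output"]

    def wrap(self, kind: str) -> Callable:
        """Decorator: record real outputs, or serve recorded ones without calling the function."""
        def decorator(fn: Callable) -> Callable:
            @functools.wraps(fn)
            def wrapper(*args, **kwargs):
                call_input = {"args": list(args), "kwargs": kwargs}
                if self.mode == "replay":
                    return self._next(kind, fn.__name__, call_input)
                output = fn(*args, **kwargs)
                self.events.append({"kind": kind, "name": fn.__name__,
                                    "input": call_input, "output": output})
                return output
            return wrapper
        return decorator

    def now(self) -> float:
        """Virtual clock: real time while recording, recorded timestamps while replaying."""
        if self.mode == "replay":
            return self._next("clock", "now", {})
        ts = time.time()
        self.events.append({"kind": "clock", "name": "now", "input": {}, "output": ts})
        return ts


rec = Recorder()


@rec.wrap("tool")
def fetch_price(symbol: str) -> float:
    return round(random.uniform(90, 110), 2)  # nondeterministic stand-in for an external API


def agent_step() -> dict:
    started = rec.now()  # instead of calling time.time() directly
    return {"started": started, "price": fetch_price("ACME")}


original = agent_step()
rec.start_replay()
replayed = agent_step()
print(replayed == original)  # True
```

Replay also checks the recorded inputs, so an agent that calls tools in a different order or with different arguments fails loudly instead of silently receiving mismatched results.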

Part 9 will explore dynamic conformance checking and test-time safety guarantees.