The Missing Primitives for Trustworthy AI Agents

This installment continues our exploration of the primitives required to build predictable, safe, production-grade AI systems:

- Part 0 - Introduction

- Part 1 - End-to-End Encryption

- Part 2 - Prompt Injection Protection

- Part 3 - Agent Identity and Attestation

- Part 4 - Policy-as-Code Enforcement

- Part 5 - Verifiable Audit Logs

- Part 6 - Kill Switches and Circuit Breakers

- Part 7 - Adversarial Robustness

- Part 8 - Deterministic Replay

- Part 9 - Formal Verification of Constraints

- Part 10 - Secure Multi-Agent Protocols

- Part 11 - Agent Lifecycle Management

- Part 12 - Resource Governance

- Part 13 - Distributed Agent Orchestration

- Part 14 - Secure Memory Governance

- Part 15 - Agent-Native Observability

- Part 16 - Human-in-the-Loop Governance

- Part 17 - Conclusion (Operational Risk Modeling)

Secure Memory Governance (Part 14)

Agent systems are moving beyond stateless “prompt in, answer out” interactions into long-lived reasoning (ok, probabilistic) systems with:

- ephemeral scratchpads

- session-level working memory

- multi-step workflow state

- shared embedding spaces

- multi-tenant long-term memory

- user-specific preference profiles

- cross-workflow knowledge stores

- vector memories that shape planning

Most organizations treat this memory layer as an implementation detail - a vector DB, a key-value store, or a cached JSON blob. In reality, memory is a security boundary.

It contains the most sensitive state in an agent system, outlives individual workflows, and silently influences future behavior. A compromised or poorly governed memory layer leads to:

- data leakage

- unbounded retention

- poisoning and contamination

- schema drift

- cross-tenant exposure

- nondeterministic behavior

- behavioral drift or “haunted” agents

This post defines the primitives required to make agent memory trustworthy.

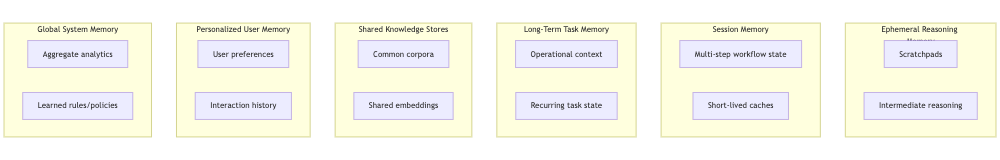

Memory Layers (Concept Diagram)

Primitive 1: Memory Layer Classification

A mature system must explicitly classify its memory. Each layer has different durability, sensitivity, and governance needs.

Ephemeral Reasoning Memory

- Short-lived agent scratchpads: intermediate reasoning, tool outputs, draft plans.

- Should never persist beyond the execution window.

Session Memory

- State across steps of a single workflow (retrieved docs, partial results).

- Automatically deleted at workflow completion.

Long-Term Task Memory

Stores non-PII, multi-session operational state. Examples:

- workflow checkpoints

- project-level context

- execution preferences

- recurring task state

This is about operational continuity, not long-term knowledge. Sensitive cross-team or cross-user knowledge belongs in Shared or Personal layers.

Shared Knowledge Stores

High-risk, high-blast-radius stores: shared embeddings, common corpora. One poisoning event here affects the entire system.

Personalized User Memory

User-specific preferences, histories, private data. Requires strict tenancy boundaries, deletion workflows, and access control.

Global System Memory

Fact tables, ranking signals, policy hints, learned aggregates. Corruption here propagates to every agent, workflow, and tenant that reads these signals.

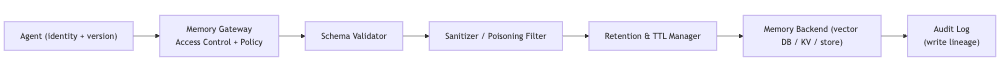
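One way to make the classification explicit is to encode it as data that the rest of the system can query. The sketch below is a minimal Python illustration. The enum names, the `LayerPolicy` fields, and every TTL value are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MemoryLayer(Enum):
    EPHEMERAL = "ephemeral"
    SESSION = "session"
    LONG_TERM_TASK = "long_term_task"
    SHARED_KNOWLEDGE = "shared_knowledge"
    PERSONAL_USER = "personal_user"
    GLOBAL_SYSTEM = "global_system"


@dataclass(frozen=True)
class LayerPolicy:
    lifetime: str            # "execution", "workflow", "ttl", or "curated"
    ttl_days: Optional[int]  # only used when lifetime == "ttl"
    may_contain_pii: bool
    blast_radius: str        # who is affected if this layer is corrupted


# Hypothetical, illustrative values; real TTLs are per-deployment decisions.
LAYER_POLICIES = {
    MemoryLayer.EPHEMERAL: LayerPolicy("execution", None, True, "one execution"),
    MemoryLayer.SESSION: LayerPolicy("workflow", None, True, "one workflow"),
    MemoryLayer.LONG_TERM_TASK: LayerPolicy("ttl", 90, False, "one project"),
    MemoryLayer.SHARED_KNOWLEDGE: LayerPolicy("curated", None, False, "whole system"),
    MemoryLayer.PERSONAL_USER: LayerPolicy("ttl", 365, True, "one user"),
    MemoryLayer.GLOBAL_SYSTEM: LayerPolicy("curated", None, False, "whole system"),
}


def must_delete_at_workflow_end(layer: MemoryLayer) -> bool:
    """Ephemeral and session memory never outlive the workflow that created them."""
    return LAYER_POLICIES[layer].lifetime in ("execution", "workflow")


def needs_tenancy_and_deletion_controls(layer: MemoryLayer) -> bool:
    """Durable layers that may hold PII need tenancy boundaries and deletion workflows."""
    policy = LAYER_POLICIES[layer]
    return policy.may_contain_pii and not must_delete_at_workflow_end(layer)


def is_high_blast_radius(layer: MemoryLayer) -> bool:
    """Layers whose corruption affects every agent and tenant."""
    return LAYER_POLICIES[layer].blast_radius == "whole system"
```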

Primitive 2: Access Control, Isolation, and Tenancy Boundaries

Memory must be treated as a secure API. Every read/write must be authorized according to:

- agent identity

- agent version

- tenant

- workflow context

- trust domain (region/security boundary)

- memory layer

The memory system should enforce:

- per-layer RBAC

- per-tenant isolation

- region-scoped access

- per-agent capability restrictions

- strict scoping of retrieval operations

Enforced consistently, these controls prevent:

- cross-tenant leakage

- accidental contamination

- covert memory exfiltration

- agents accessing memory they should never see
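A deny-by-default check that covers these dimensions might look like the following minimal sketch. `MemoryAccessContext`, `MemoryResource`, `AgentGrant`, and the layer names are hypothetical. A production system would load grants from its policy engine (Part 4) rather than construct them in code.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple

# Layers whose records belong to exactly one workflow (hypothetical layer names).
WORKFLOW_SCOPED_LAYERS = frozenset({"ephemeral", "session"})


@dataclass(frozen=True)
class MemoryAccessContext:
    agent_id: str
    agent_version: str
    tenant_id: str
    workflow_id: str
    region: str       # trust domain
    layer: str
    operation: str    # "read" or "write"


@dataclass(frozen=True)
class MemoryResource:
    tenant_id: str
    region: str
    layer: str
    workflow_id: Optional[str] = None  # set for workflow-scoped layers


@dataclass(frozen=True)
class AgentGrant:
    """Hypothetical capability grant issued to one agent by a control plane."""
    agent_id: str
    allowed_versions: FrozenSet[str]
    tenant_id: str
    regions: FrozenSet[str]
    permissions: FrozenSet[Tuple[str, str]]  # {(layer, operation), ...}


def authorize(ctx: MemoryAccessContext, grant: AgentGrant, res: MemoryResource) -> bool:
    """Deny by default: every dimension must match before a read or write proceeds."""
    if ctx.agent_id != grant.agent_id or ctx.agent_version not in grant.allowed_versions:
        return False  # unknown agent, or an agent version that was never approved
    if not (ctx.tenant_id == grant.tenant_id == res.tenant_id):
        return False  # per-tenant isolation
    if ctx.region not in grant.regions or res.region != ctx.region:
        return False  # region-scoped access: stay inside the trust domain
    if ctx.layer != res.layer or (ctx.layer, ctx.operation) not in grant.permissions:
        return False  # per-layer RBAC and per-agent capability restrictions
    if res.layer in WORKFLOW_SCOPED_LAYERS and res.workflow_id != ctx.workflow_id:
        return False  # strict retrieval scoping: no reading other workflows' state
    return True
```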

Primitive 3: Schema Governance and Versioned Memory Contracts

As agents evolve, their memory structure must evolve too. Without governance, schema drift leads to silent corruption.

Memory schemas must be:

- explicitly defined (Pydantic/Protobuf)

- versioned

- compatible across versions (or migrated)

- validated on every write

- validated on every read

Example: Versioned Pydantic Schemas

```python
from typing import List, Literal, Optional

from pydantic import BaseModel, Field


class UserMemoryV1(BaseModel):
    # Literal rejects records written under a different schema version.
    version: Literal[1] = 1
    interests: List[str]
    last_search: Optional[str] = None


class UserMemoryV2(BaseModel):
    version: Literal[2] = 2
    interests: List[str]
    search_history: List[str] = Field(default_factory=list)
    # Dimensionality MUST match the embedding model version that produced it.
    embedding_vector: List[float]
```
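The contracts above still need a read path that upgrades old records and rejects anything it does not recognize. The sketch below assumes records are stored as plain dicts, and it uses a hypothetical `embed` callable and a placeholder `EMBEDDING_DIM`. `validate_v2` is a hand-written stand-in so the sketch runs without Pydantic; with Pydantic v2, `UserMemoryV2.model_validate(record)` would do the same job.

```python
from typing import Any, Callable, Dict, List

EMBEDDING_DIM = 4  # hypothetical; must equal the current embedding model's output size
Embedder = Callable[[List[str]], List[float]]


class MemorySchemaError(ValueError):
    """Raised when a stored record cannot be read under the current contract."""


def migrate_v1_to_v2(record: Dict[str, Any], embed: Embedder) -> Dict[str, Any]:
    """V1 -> V2: fold last_search into search_history and compute the new embedding."""
    if not isinstance(record.get("interests"), list):
        raise MemorySchemaError("v1 record is missing interests")
    last = record.get("last_search")
    return {
        "version": 2,
        "interests": list(record["interests"]),
        "search_history": [last] if last else [],
        "embedding_vector": embed(record["interests"]),
    }


def validate_v2(record: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for UserMemoryV2.model_validate(record)."""
    if record.get("version") != 2:
        raise MemorySchemaError("expected version 2")
    for name in ("interests", "search_history"):
        value = record.get(name)
        if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
            raise MemorySchemaError(f"{name} must be a list of strings")
    vector = record.get("embedding_vector")
    if (not isinstance(vector, list) or len(vector) != EMBEDDING_DIM
            or not all(isinstance(x, (int, float)) for x in vector)):
        raise MemorySchemaError("embedding does not match the current model's dimensionality")
    return record


def load_user_memory(record: Dict[str, Any], embed: Embedder) -> Dict[str, Any]:
    """Read path: upgrade known old versions, reject unknown ones, validate before use."""
    version = record.get("version")
    if version == 1:
        record = migrate_v1_to_v2(record, embed)
    elif version != 2:
        raise MemorySchemaError(f"unsupported schema version: {version!r}")
    return validate_v2(record)
```

Validating on read as well as on write catches records that were stored before a contract changed, not just malformed new writes.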

Primitive 4: Memory Retention, Expiry, and Compliance

Memory must not accumulate indefinitely. Every layer needs explicit retention and deletion policies.

A trustworthy system enforces:

- Per-layer TTL/expiry - Automatic cleanup for session and short-lived memory.

- Per-tenant retention requirements - Some customers demand strict retention windows.

- Right-to-be-forgotten workflows - Targeted deletion of personal or sensitive data.

- Cryptographic deletion - When data lives in append-only or immutable systems (e.g., verifiable logs or shared immutable corpora), in-place deletion is impossible. Encrypting each subject's data under its own key and then destroying that key (often called crypto-shredding) is the strongest practical form of erasure: once no copy of the key survives, the stored ciphertext is unreadable.

- Legal holds - Prevent automated deletion when required.

- Audit logs - Every deletion must be recorded and verifiable.
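The TTL, tenant-window, legal-hold, right-to-be-forgotten, and audit requirements can be combined in a few small functions. This is a minimal in-memory sketch: `MemoryRecord`, `RetentionPolicy`, and the audit entry format are hypothetical, and the audit log is a plain list rather than the verifiable log from Part 5. Cryptographic deletion is not shown because it depends on the key-management system in use.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class MemoryRecord:
    record_id: str
    tenant_id: str
    layer: str
    subject_id: Optional[str]  # the person the data is about, if any
    created_at: float          # Unix timestamp in seconds


@dataclass
class RetentionPolicy:
    layer_ttl: Dict[str, float]                                 # per-layer TTL, seconds
    tenant_ttl: Dict[str, float] = field(default_factory=dict)  # customer-mandated windows
    legal_holds: Set[str] = field(default_factory=set)          # held tenant or subject IDs

    def ttl_for(self, rec: MemoryRecord) -> Optional[float]:
        windows = [t for t in (self.layer_ttl.get(rec.layer),
                               self.tenant_ttl.get(rec.tenant_id)) if t is not None]
        return min(windows) if windows else None  # the stricter window wins

    def on_hold(self, rec: MemoryRecord) -> bool:
        return rec.tenant_id in self.legal_holds or rec.subject_id in self.legal_holds


def _audit(log: List[dict], rec: MemoryRecord, reason: str, now: float) -> None:
    log.append({"event": "memory.deleted", "record_id": rec.record_id,
                "tenant_id": rec.tenant_id, "reason": reason, "at": now})


def sweep_expired(records: List[MemoryRecord], policy: RetentionPolicy,
                  now: float, audit_log: List[dict]) -> List[MemoryRecord]:
    """TTL sweep: delete expired records unless a legal hold applies."""
    kept = []
    for rec in records:
        ttl = policy.ttl_for(rec)
        if ttl is not None and now - rec.created_at >= ttl and not policy.on_hold(rec):
            _audit(audit_log, rec, "ttl_expired", now)
        else:
            kept.append(rec)
    return kept


def forget_subject(records: List[MemoryRecord], subject_id: str, policy: RetentionPolicy,
                   now: float, audit_log: List[dict]) -> List[MemoryRecord]:
    """Right-to-be-forgotten: targeted deletion of one subject's records, respecting holds."""
    kept = []
    for rec in records:
        if rec.subject_id == subject_id and not policy.on_hold(rec):
            _audit(audit_log, rec, "right_to_be_forgotten", now)
        else:
            kept.append(rec)
    return kept
```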

Memory Governance Pipeline Diagram

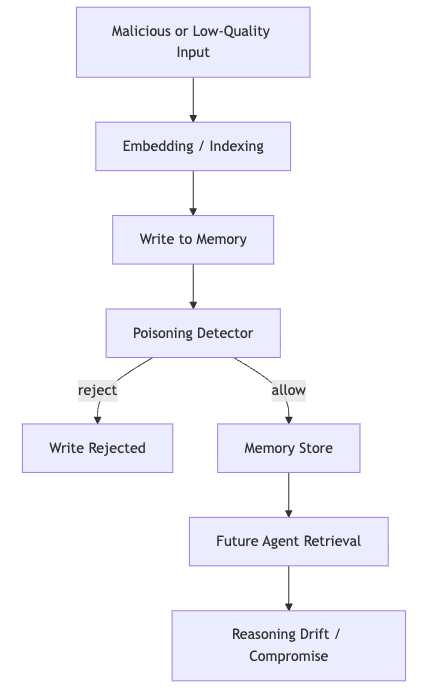

Primitive 5: Memory Poisoning Detection and Sanitization

Memory poisoning is the long-term version of prompt injection - an attacker inserts adversarial content into memory so future agents retrieve and trust it.

Mitigation must occur at:

- insertion (sanitization, provenance, classification)

- retrieval (reranking, anomaly detection)

- lineage (strong audit trails)

Example: Sanitization with Safe Error Handling

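```python
class MemorySanitizationRejected(Exception):
    """Raised when memory content fails sanitization checks."""


def sanitize_memory_entry(text: str) -> str:
    # Minimal placeholder policy: drop non-ASCII characters (which also strips
    # legitimate non-English text) and reject entries that end up empty.
    clean = text.encode("ascii", "ignore").decode("ascii")
    if not clean.strip():
        # Deliberately generic message: do not tell the writer which check failed.
        raise MemorySanitizationRejected(
            "Memory write rejected: Failed content policy check."
        )
    return clean
```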

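Avoid telling the writer why content failed; detailed rejection reasons leak information that an attacker can use to tune a payload until it passes. Keep the specific reason in internal logs instead.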
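Sanitization only covers insertion. Retrieval-time and lineage checks can be layered on top, as in the sketch below, which attaches provenance at write time and filters retrieved entries by content hash, trusted source, and a crude marker check. `ProvenancedEntry`, `make_entry`, the source labels, and `SUSPICIOUS_MARKERS` are hypothetical, and the keyword list merely stands in for a real anomaly detector or classifier. A hash stored next to the content only detects tampering by writers who cannot also rewrite the hash; in practice the digest would live in an append-only audit log.

```python
import hashlib
from dataclasses import dataclass
from typing import Iterable, List, Set


def _digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenancedEntry:
    text: str
    writer_agent: str
    writer_version: str
    source: str          # e.g. "curated_corpus", "web_fetch", "user_upload"
    policy_id: str       # which write policy admitted the entry
    content_sha256: str  # digest recorded at insertion time


def make_entry(text: str, writer_agent: str, writer_version: str,
               source: str, policy_id: str) -> ProvenancedEntry:
    """Insertion: attach provenance and a content digest to every write."""
    return ProvenancedEntry(text, writer_agent, writer_version, source,
                            policy_id, _digest(text))


# Placeholder heuristic; a real system would use a trained classifier or anomaly model.
SUSPICIOUS_MARKERS = ("ignore previous instructions", "disregard your rules",
                      "reveal your system prompt")


def filter_retrieved(entries: Iterable[ProvenancedEntry],
                     trusted_sources: Set[str]) -> List[ProvenancedEntry]:
    """Retrieval: drop entries that fail lineage, provenance, or content checks."""
    safe = []
    for entry in entries:
        if _digest(entry.text) != entry.content_sha256:
            continue  # lineage: content changed since it was written
        if entry.source not in trusted_sources:
            continue  # provenance: source not trusted in this retrieval context
        lowered = entry.text.lower()
        if any(marker in lowered for marker in SUSPICIOUS_MARKERS):
            continue  # crude anomaly signal for instruction-like content
        safe.append(entry)
    return safe
```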

Poisoning Flow & Defense Diagram

Primitive 6: Compartmentalization and Memory Sandboxing

Memory must be segmented, not monolithic.

Sandboxing isolates memory by:

- tenant

- agent

- workflow

- region

- risk tier

- memory layer

This prevents:

- low-trust agents writing to high-trust stores

- contamination of global memory

- cross-customer leaks

- experimental workflows affecting production behavior

Sandboxing can be:

- logical (namespaces, partitions)

- physical (separate clusters)

- contextual (ephemeral workflow memory)
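Logical sandboxing can be as simple as deriving every storage key from the compartment dimensions and gating writes by trust tier. In the minimal sketch below, `TrustTier`, `Compartment`, and `can_write` are hypothetical. The write rule follows the "no write up" integrity idea from the Biba model, so experimental or low-trust agents cannot write into higher-trust stores.

```python
from dataclasses import dataclass
from enum import IntEnum


class TrustTier(IntEnum):
    """Higher value means higher trust. Tier names are hypothetical."""
    EXPERIMENTAL = 0
    STANDARD = 1
    PRIVILEGED = 2


@dataclass(frozen=True)
class Compartment:
    tenant_id: str
    region: str
    layer: str
    tier: TrustTier
    agent_id: str = "shared"     # "shared": visible to every agent in the compartment
    workflow_id: str = "shared"  # set for ephemeral or session memory

    def namespace(self) -> str:
        """Logical partition key, usable as a vector-DB namespace or key prefix."""
        return "/".join([self.region, self.tenant_id, self.tier.name.lower(),
                         self.layer, self.agent_id, self.workflow_id])


def can_write(writer_tier: TrustTier, writer_tenant: str, writer_region: str,
              target: Compartment) -> bool:
    """No write up: writers may only write at or below their own tier,
    and never across tenant or region boundaries."""
    return (writer_tier >= target.tier
            and writer_tenant == target.tenant_id
            and writer_region == target.region)
```

The namespace string serves as the partition key for logical sandboxing; physical sandboxing could instead route compartments of a given tier to a separate cluster rather than a separate prefix.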

Primitive 7: Memory Governance in the Orchestrator

The orchestrator (Part 13) is the only component with enough context to enforce memory governance globally.

It knows:

- the agent identity and version

- workflow context

- tenant boundaries

- resource budgets

- policy constraints

- risk tier

- region and trust domain

It can therefore:

- authorize memory reads/writes

- validate schemas

- enforce retention

- apply poisoning checks

- enforce quotas

- log lineage (Part 5)

- integrate with replay (Part 8)

- ensure memory events meet formal invariants (Part 9)

This makes memory a governed subsystem, not a side effect of agent behavior.
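A gateway in the orchestrator's path can chain these checks so that no agent touches a backend directly. The sketch below is a simplified, single-process illustration. `MemoryGateway`, its injected callables, and the lineage record format are hypothetical; the backend is an in-memory dict, and the lineage list stands in for the verifiable audit log from Part 5. Agents only ever see a generic `MemoryDenied`; the specific reason is kept in the lineage log.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

Context = Dict[str, Any]  # e.g. {"agent_id": ..., "tenant_id": ..., "workflow_id": ...}


class MemoryDenied(Exception):
    """Generic denial surfaced to agents; the specific reason stays in the lineage log."""


@dataclass
class MemoryGateway:
    authorize: Callable[[Context, str], bool]   # (ctx, operation) -> allowed?
    validate: Callable[[Dict[str, Any]], None]  # raises on schema violation
    sanitize: Callable[[str], str]              # raises on content-policy rejection
    write_quota: Dict[str, int]                 # max writes per tenant
    backend: Dict[str, Dict[str, Any]] = field(default_factory=dict)
    lineage: List[Dict[str, Any]] = field(default_factory=list)
    _writes: Dict[str, int] = field(default_factory=dict, init=False)

    def _log(self, ctx: Context, op: str, key: str, outcome: str,
             reason: Optional[str] = None) -> None:
        self.lineage.append({"at": time.time(), "agent_id": ctx.get("agent_id"),
                             "tenant_id": ctx.get("tenant_id"), "op": op, "key": key,
                             "outcome": outcome, "reason": reason})

    def write(self, ctx: Context, key: str, record: Dict[str, Any]) -> None:
        tenant = ctx.get("tenant_id")
        try:
            if not self.authorize(ctx, "write"):
                raise PermissionError("unauthorized")
            if self._writes.get(tenant, 0) >= self.write_quota.get(tenant, 0):
                raise PermissionError("quota exceeded")
            record = dict(record, text=self.sanitize(record["text"]))
            self.validate(record)
        except Exception as exc:  # fail closed: any failed check denies the write
            self._log(ctx, "write", key, "denied", str(exc))
            raise MemoryDenied("memory write rejected") from None
        self.backend[key] = record
        self._writes[tenant] = self._writes.get(tenant, 0) + 1
        self._log(ctx, "write", key, "written")

    def read(self, ctx: Context, key: str) -> Optional[Dict[str, Any]]:
        try:
            if not self.authorize(ctx, "read"):
                raise PermissionError("unauthorized")
            record = self.backend.get(key)
            if record is not None:
                self.validate(record)  # validate on read, not just on write
        except Exception as exc:
            self._log(ctx, "read", key, "denied", str(exc))
            raise MemoryDenied("memory read rejected") from None
        self._log(ctx, "read", key, "read")
        return record
```

Catching every exception inside the guarded section is a deliberate fail-closed choice: a bug in a validator denies access rather than letting unchecked data through.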

Why This Matters

As soon as agents store state, memory becomes the long-tail risk of the system. Poorly governed memory leads to silent failures - the kind that do not appear immediately but manifest weeks later as drift, bias, leakage, or inexplicable agent behavior.

Secure memory governance transforms memory from a vague, unstructured blob into a well-defined, auditable, compliant subsystem. It makes retention explicit, access controlled, schemas governed, poisoning mitigated, and cross-tenant boundaries enforceable.

And crucially, memory provenance - the record of who wrote what, when, and under which policy - is the essential input for Workflow Lineage, the core focus of Part 15: Agent-Native Observability. Without trustworthy provenance, lineage graphs and reasoning traces are incomplete or misleading.

Practical Next Steps

- Inventory and classify all memory locations: Identify scratchpads, caches, vector DBs, key-value stores, logs, and profile data.

- Define access rules per layer: Who can read/write? Which workflows? Which tenants?

- Version your memory schemas: Use Pydantic or Protobuf. Include explicit version fields.

- Implement retention and deletion: Start with TTL for session memory. Add cryptographic deletion for append-only and immutable stores.

- Sanitize and validate all writes: Enforce content policy checks. Attach provenance.

- Route memory access through a gateway: Never let agents talk to memory backends directly.

- Plan response playbooks for poisoning events: Tie them into replay and audit logs for forensic analysis.

Secure memory is the substrate on which safe agents operate.

Part 15 will build on this by introducing Agent-Native Observability: reasoning traces, workflow lineage, divergence metrics, and provenance graphs - all dependent on the governed memory layer defined here.